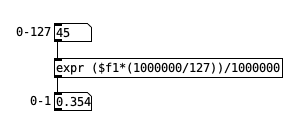

Because $f1 is already floating point, you gain nothing by multiplying by 1000000. That might be useful in the integer domain, but floating point numbers "shift" their full precision to wherever the magnitude is (the exponent moves, while the number of significant bits stays the same), so it's a wasted operation.
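A minimal sketch in Python of the "precision shifts with the magnitude" point. The original code isn't shown here, so this assumes IEEE 754 doubles, which is what Python's `float` is on mainstream platforms. 32-bit floats behave the same way, just with a coarser band between 2^-24 and 2^-23. `math.ulp` (Python 3.9+) gives the gap to the next representable float. `relative_spacing` is a hypothetical helper made up for this illustration.

```python
import math

# Assumes IEEE 754 double precision (Python's float on mainstream platforms).
# math.ulp(x) is the gap between x and the next larger representable float.
def relative_spacing(x: float) -> float:
    """Gap to the next float, expressed as a fraction of x itself."""
    return math.ulp(x) / abs(x)

# A tiny value, a mid-range value, and a value already scaled by 1000000.
values = [0.000123, 0.75, 123.456, 0.75 * 1_000_000]
for x in values:
    print(f"{x:>14g}  ulp={math.ulp(x):.3e}  relative={relative_spacing(x):.3e}")
```

Every printed `relative` value lands between 2^-53 and 2^-52. Scaling by 1000000 moves the exponent, but it doesn't add any significant bits.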

Then, dividing by 1000000 is mathematically the same as multiplying by 0.000001. These are convenient numbers in decimal, but 0.000001 is a repeating fraction in binary floating point (like 1/7 = 0.142857142857... in decimal), so it can only be stored approximately, and every multiply or divide rounds its result to the nearest representable float. So... you thought multiplying and dividing would give you extra precision, but in fact you've just introduced extra rounding error. This probably explains why it never reaches 1.

This is a very common misconception about floating point numbers. We tend to assume that a finite decimal should also be a finite float, but computer floats are binary. Any rational number whose denominator (in lowest terms) has only 2 and 5 as prime factors will be finite in decimal, but any factor of 5 in the denominator creates an infinitely repeating binary representation. So 0.1 decimal is 1.1001100110011... x 2^(-4) in binary, and once it's cut off to fit in a float, it's no more exact than 0.3333 is as an approximation of 1/3.
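Another Python sketch of the same points, again assuming IEEE 754 doubles. `Fraction(float)` converts a float's exact stored value without rounding, which shows that 1000000 is stored exactly but 0.000001 is not. The hypothetical helpers `expand` (long division in any base up to 10) and `terminates` (the prime-factor rule for finite expansions) illustrate the repeating-fraction argument. The last lines count how many of 1000 sample values the multiply-then-divide round trip changes.

```python
import math
from fractions import Fraction

# Fraction(some_float) recovers the float's exact stored binary value,
# so comparing it to the intended rational shows whether storage was exact.
print(Fraction(1e6) == 1_000_000)                # True: an integer, exact in binary
print(Fraction(1e-6) == Fraction(1, 1_000_000))  # False: only approximated
print((0.1).hex())                               # 0x1.999999999999ap-4 (hex 1.999... = binary 1.1001...)

def expand(p: int, q: int, base: int, n: int) -> str:
    """First n digits after the point of p/q in `base` (base <= 10), by long division."""
    digits, r = [], p % q
    for _ in range(n):
        r *= base
        digits.append(str(r // q))
        r %= q
    return "".join(digits)

def terminates(x: Fraction, base: int) -> bool:
    """True if x has a finite expansion in `base`: every prime factor of the
    reduced denominator must also divide the base."""
    d = x.denominator          # Fraction is always kept in lowest terms
    g = math.gcd(d, base)
    while g > 1:               # strip out factors shared with the base
        d //= g
        g = math.gcd(d, base)
    return d == 1

print(expand(1, 7, 10, 12))    # 142857142857 (1/7 in decimal)
print(expand(1, 10, 2, 12))    # 000110011001 (0.1 in binary = 1.1001...b x 2^-4)
print(terminates(Fraction(1, 10), 10), terminates(Fraction(1, 10), 2))  # True False

# Scaling up and back down rounds twice; at best it returns x unchanged.
xs = [i / 1000 for i in range(1, 1001)]
changed = sum((x * 1e6) / 1e6 != x for x in xs)
print(f"{changed} of {len(xs)} values changed by the round trip")
```

The round trip can only hand back x or a neighbouring float. It can never recover bits that weren't stored in the first place.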

hjh