-

andresbrocco

posted in abstract~

@Obineg sorry for the delay!

Actually, I'm not the one who designed the filters. I just copied the coefficients from research published by IRCAM! That's mentioned in the README file.

Regarding the left/right phase difference, that's also a parameter provided by IRCAM (the ITD, interaural time difference), which I implemented using two delay lines, one for each channel.
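A minimal Python sketch of the "one delay line per channel" idea, assuming whole-sample delays at 44100 Hz (no fractional delay) and delay times given in seconds. The names delay_line and apply_itd are hypothetical; this is not the code inside the abstraction.

```python
from typing import List, Sequence, Tuple


def delay_line(x: Sequence[float], seconds: float, sr: float = 44100.0) -> List[float]:
    """Fixed whole-sample delay that keeps the block length (assumption: no fractional delay)."""
    n = min(len(x), max(0, round(seconds * sr)))
    return [0.0] * n + list(x[:len(x) - n])


def apply_itd(mono: Sequence[float], left_delay_s: float, right_delay_s: float,
              sr: float = 44100.0) -> Tuple[List[float], List[float]]:
    """One delay per ear; the ITD is the difference between the two delay times."""
    return delay_line(mono, left_delay_s, sr), delay_line(mono, right_delay_s, sr)
```

For example, apply_itd(block, 0.0, 2 / 44100) leaves the left channel untouched and delays the right channel by two samples.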

Have you tested it out? What do you think of the performance? Does it sound good to your ears?

-

andresbrocco

posted in abstract~

Ahh, indeed! I forgot to mention that.

The goal is to be pure vanilla, so I'll replace it with:

[*~ -1]
|
[+~ 1]

Thanks @FFW and @alexandros!
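The vanilla replacement relies on the identity 1 - x = (x * -1) + 1. A tiny Python check of that identity on one block of samples, where mul_sig and add_sig are hypothetical stand-ins for [*~] and [+~]:

```python
from typing import List, Sequence


def mul_sig(x: Sequence[float], k: float) -> List[float]:
    """Per-sample multiply, like [*~ k]."""
    return [s * k for s in x]


def add_sig(x: Sequence[float], k: float) -> List[float]:
    """Per-sample add, like [+~ k]."""
    return [s + k for s in x]


block = [0.0, 0.25, 1.0, -0.5]
one_minus = add_sig(mul_sig(block, -1.0), 1.0)  # [*~ -1] -> [+~ 1]
```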

-

andresbrocco

posted in abstract~

Hey @alexandros, that's not a typo!

That's short for [expr 1-$f1]: the exclamation point reverses the order of the inlets when it precedes a basic math operator (+, -, *, /).
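The reversed-inlet behaviour can be sketched in Python as a function that swaps the two operands, so the left inlet becomes the right-hand operand. The names flipped, rminus and rdiv are hypothetical and only illustrate the semantics, not any object's source code.

```python
import operator
from typing import Callable

BinOp = Callable[[float, float], float]


def flipped(op: BinOp) -> BinOp:
    """Swap operands: flipped(op)(left, right) == op(right, left)."""
    def swapped(left: float, right: float) -> float:
        return op(right, left)
    return swapped


rminus = flipped(operator.sub)    # like [!- 1]: input x -> 1 - x
rdiv = flipped(operator.truediv)  # like [!/ 1]: input x -> 1 / x
```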

-

andresbrocco

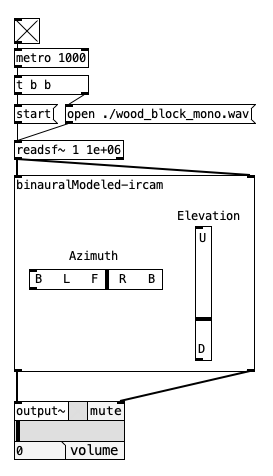

posted in abstract~

An efficient binaural spatializer

The "binauralModeled-ircam" object is a pure-vanilla implementation of the binaural model created at IRCAM. An example of its capabilities can be found on this page.

The object can be found in my repository: https://github.com/andresbrocco/binauralModeled-pd

Main concept

Basically, it applies the ITD (interaural time difference) and approximates the HRTF (head-related transfer function) with a series of biquad filters, whose coefficients are available here.

Sample-rate limitation: these coefficients only work for audio at 44100 Hz!
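A minimal Python sketch of a cascade of biquad sections, assuming the coefficient order and difference equation documented for Pd's [biquad~]: (fb1, fb2, ff1, ff2, ff3), with w[n] = x[n] + fb1*w[n-1] + fb2*w[n-2] and y[n] = ff1*w[n] + ff2*w[n-1] + ff3*w[n-2]. The functions biquad and cascade are hypothetical names, and any coefficients you feed them here are made up, not the IRCAM data.

```python
from typing import List, Sequence, Tuple

# (fb1, fb2, ff1, ff2, ff3), assumed to follow Pd's [biquad~] ordering
Coeffs = Tuple[float, float, float, float, float]


def biquad(x: Sequence[float], c: Coeffs) -> List[float]:
    """One direct-form-II biquad section."""
    fb1, fb2, ff1, ff2, ff3 = c
    w1 = w2 = 0.0  # w[n-1], w[n-2]
    y: List[float] = []
    for sample in x:
        w0 = sample + fb1 * w1 + fb2 * w2
        y.append(ff1 * w0 + ff2 * w1 + ff3 * w2)
        w2, w1 = w1, w0
    return y


def cascade(x: Sequence[float], sections: Sequence[Coeffs]) -> List[float]:
    """Run the signal through each section in series, like chained [biquad~] objects."""
    y = list(x)
    for c in sections:
        y = biquad(y, c)
    return y
```

Digital filter coefficients encode a response relative to the sample rate they were designed for, which is why a fixed coefficient set only behaves as intended at that rate.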

Space interpolation

There is no interpolation in space (between datapoints): the set of HRTF coefficients used is the one for the datapoint closest to the given azimuth and elevation (by Euclidean distance).
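The closest-datapoint rule amounts to a nearest-neighbour lookup. A brute-force Python sketch, assuming the dataset is a list of (azimuth, elevation) pairs in degrees; nearest_index is a hypothetical name, and, like the plain Euclidean distance described above, it does not treat 359° and 0° azimuth as neighbours.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (azimuth, elevation) in degrees


def nearest_index(grid: Sequence[Point], az: float, el: float) -> int:
    """Linear scan: index of the grid point closest to (az, el) by Euclidean distance."""
    return min(range(len(grid)),
               key=lambda i: math.hypot(grid[i][0] - az, grid[i][1] - el))
```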

Time interpolation

There is interpolation in time (so that a moving source sounds smooth): two binaural models run concurrently, and the transition is made by alternating which one is used (previous/current). Whenever a new location is received, that transition takes 20 ms.
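A Python sketch of a 20 ms transition between the previous and current model outputs. The post only gives the duration, so the linear ramp shape and the name crossfade are assumptions; at 44100 Hz, 20 ms is 882 samples.

```python
from typing import List, Sequence


def crossfade(prev: Sequence[float], curr: Sequence[float],
              sr: float = 44100.0, ms: float = 20.0) -> List[float]:
    """Fade from prev to curr over `ms` milliseconds (assumed linear ramp)."""
    n = max(1, round(sr * ms / 1000.0))  # 20 ms at 44100 Hz -> 882 samples
    out: List[float] = []
    for i, (a, b) in enumerate(zip(prev, curr)):
        g = min(i / n, 1.0)  # gain of the current model ramps from 0 to 1
        out.append((1.0 - g) * a + g * b)
    return out
```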

Interface

You can control the azimuth and elevation through the interface, or send them to the first inlet.

Performance

Note: if the azimuth and elevation do not exactly match the coordinates of a point in the HRTF dataset, the object performs a search by distance, which is not optimal. Therefore, if this object is embedded in a higher-level application and you are concerned about performance, you should implement a k-d tree search to find the exact datapoint before passing it to the "binauralModeled-ircam" object.
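A Python sketch of such a k-d tree lookup, assuming the dataset is a list of (azimuth, elevation) pairs; Node, build and nearest are hypothetical names. The tree is built once, and each query descends toward the target and only visits the other branch when the splitting line is closer than the best point found so far. Azimuth wraparound is not handled.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (azimuth, elevation) in degrees


class Node:
    """One k-d tree node: a dataset point, its index, and its splitting axis."""

    def __init__(self, point: Point, index: int, axis: int,
                 left: Optional["Node"], right: Optional["Node"]) -> None:
        self.point, self.index, self.axis = point, index, axis
        self.left, self.right = left, right


def build(points: Sequence[Point]) -> Optional[Node]:
    """Build once, alternating splits on azimuth (axis 0) and elevation (axis 1)."""
    def rec(items: List[Tuple[Point, int]], depth: int) -> Optional[Node]:
        if not items:
            return None
        axis = depth % 2
        items = sorted(items, key=lambda it: it[0][axis])
        mid = len(items) // 2
        point, index = items[mid]
        return Node(point, index, axis,
                    rec(items[:mid], depth + 1), rec(items[mid + 1:], depth + 1))
    return rec([(p, i) for i, p in enumerate(points)], 0)


def nearest(root: Optional[Node], target: Point) -> int:
    """Index of the dataset point closest to target (Euclidean distance)."""
    best = (float("inf"), -1)  # (squared distance, index)

    def visit(node: Optional[Node]) -> None:
        nonlocal best
        if node is None:
            return
        d2 = (node.point[0] - target[0]) ** 2 + (node.point[1] - target[1]) ** 2
        if d2 < best[0]:
            best = (d2, node.index)
        diff = target[node.axis] - node.point[node.axis]
        near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
        visit(near)
        if diff * diff < best[0]:  # far side can only help if the split is closer than best
            visit(far)

    visit(root)
    return best[1]
```

The returned index gives the exact dataset coordinates, which can then be passed on so the object never needs to fall back to its own distance search.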

Ah, maybe this is obvious, but it only works with headphones!

-

andresbrocco

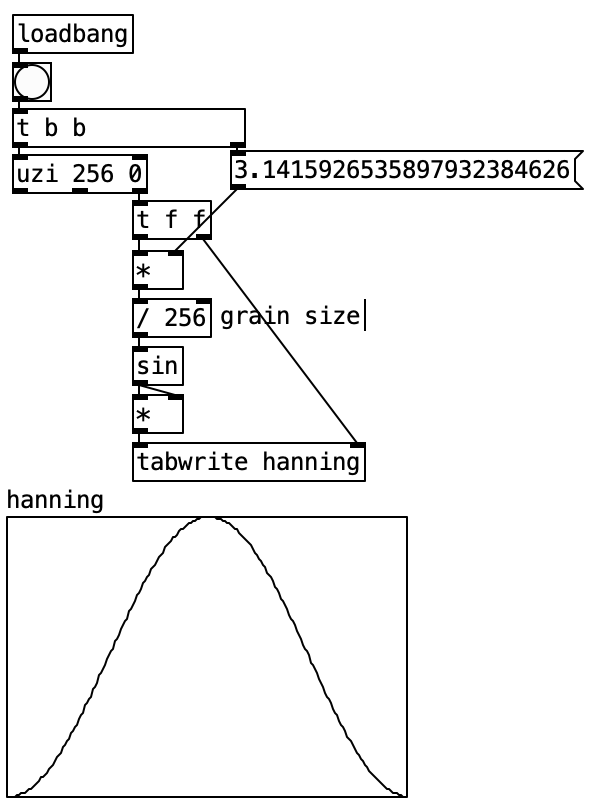

posted in technical issues

Just documenting it here: if you want to create a Hann window and store it in an array, you can do the following:
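For reference, these are the values such an array should end up holding: a Python sketch of the periodic Hann window, w[k] = 0.5 - 0.5*cos(2*pi*k/n) for k = 0..n-1, as commonly used for overlapped FFT analysis. The name hann is hypothetical, and choosing the periodic form over the symmetric one (which divides by n - 1) is an assumption, since the patch in the image may use either.

```python
import math
from typing import List


def hann(n: int) -> List[float]:
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * k / n) for k in range(n)]
```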

-

andresbrocco

posted in technical issues

@ddw_music I totally agree with you. That makes me nervous sometimes.

Maybe @Miller Puckette could change this behaviour in future releases? I would be glad.

-

andresbrocco

posted in patch~

Thanks for replying, @il-pleut! I've edited the original post.

-

andresbrocco

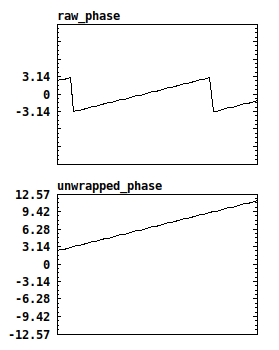

posted in patch~

Hello, I made a patch to undo what [wrap~] does.

Basically, it removes the discontinuities where the signal jumps from -pi to pi or vice versa, as shown in the image.

I personally needed it to unwrap the phase before applying the complex logarithm, when I was trying to implement liftering in the complex cepstrum (which didn't go so well, by the way...).

I hope it's useful to someone else. The patch is pure vanilla.
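A Python sketch of the unwrapping idea (NumPy's numpy.unwrap does the same thing), assuming phase values in radians wrapped to [-pi, pi) as in the image. Whenever consecutive samples jump by more than pi, a running offset of 2*pi is added or subtracted. The name unwrap is hypothetical, and only one 2*pi correction is applied per step. For values wrapped to the 0..1 range instead, the threshold would be 0.5 and the correction 1.

```python
import math
from typing import List, Optional, Sequence


def unwrap(phase: Sequence[float]) -> List[float]:
    """Remove the 2*pi jumps from a wrapped phase signal."""
    out: List[float] = []
    offset = 0.0
    prev: Optional[float] = None
    for p in phase:
        if prev is not None:
            d = p - prev
            if d > math.pi:      # jumped from about -pi up to about +pi
                offset -= 2.0 * math.pi
            elif d < -math.pi:   # jumped from about +pi down to about -pi
                offset += 2.0 * math.pi
        out.append(p + offset)
        prev = p
    return out
```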

-

andresbrocco

posted in technical issues

There is an object in the iemmatrix library that implements the Fletcher-Munson curve (equal-loudness contour). Take a look!

-

andresbrocco

posted in technical issues

@solipp, I want to get high resolution in both frequency and time.

So if I do an FFT after every block, I get high resolution in time, but the block is too small, so I zero-pad it to increase the frequency resolution.
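A Python sketch of zero-padding a block before a DFT, with zero_pad, dft and bin_spacing_hz as hypothetical helpers and a naive O(N^2) DFT standing in for [fft~]. One caveat: zero-padding brings the bins closer together (sr / padded length) and interpolates the spectrum between the original bins, but it cannot separate two components that the original block length could not already resolve. Every factor-th bin of the padded spectrum equals a bin of the unpadded one.

```python
import cmath
from typing import List, Sequence


def zero_pad(block: Sequence[float], factor: int) -> List[float]:
    """Append zeros so the block becomes `factor` times longer."""
    return list(block) + [0.0] * (len(block) * (factor - 1))


def dft(x: Sequence[float]) -> List[complex]:
    """Naive discrete Fourier transform (slow, but dependency-free)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def bin_spacing_hz(n: int, sr: float = 44100.0) -> float:
    """Distance between adjacent DFT bins for an n-point transform."""
    return sr / n
```

For example, at 44100 Hz a 64-sample block gives bins 689.0625 Hz apart, and padding it to 256 samples gives bins 172.265625 Hz apart.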

-

andresbrocco

posted in technical issues

Thanks, @whale-av!

Actually, [mux~] is not missing, but [for++], [pddplink], and [lp6_cheb~] are.

Anyway, I understand what's going on now, but @katjav didn't zero-pad the block: she actually discards part of the block by multiplying it by zero.

I've made a patch that increases the block size by a factor and also overlaps by that same factor, to keep the same DSP rate. Inside, it just multiplies the old blocks by zeros, so the result is:

[ 0 0 0 . . . 0 0 0 newBlock]
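A Python sketch of the same idea, not the patch itself: a buffer of block*factor samples advances by one block per cycle (an overlap of `factor`, so it still runs once per original block), and a mask zeroes everything except the newest block. The names padded_frames, block and factor are hypothetical.

```python
from collections import deque
from typing import Iterator, List, Sequence


def padded_frames(stream: Sequence[float], block: int, factor: int) -> Iterator[List[float]]:
    """Yield one frame per incoming block: [0 ... 0, newest block]."""
    size = block * factor
    history = deque([0.0] * size, maxlen=size)     # the larger, overlapped buffer
    mask = [0.0] * (size - block) + [1.0] * block  # zero the old blocks, keep the newest
    for start in range(0, len(stream) - block + 1, block):
        history.extend(stream[start:start + block])  # hop of one block
        yield [s * m for s, m in zip(history, mask)]
```

Padding on the left instead of the right is a circular shift of the frame, so it only changes the phase of the spectrum, not its magnitude.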

-

andresbrocco

posted in technical issues

Hi, is there an easy way to zero-pad the DSP block to get better frequency resolution in an [fft~]?

-

andresbrocco

posted in technical issues

Thank you, @whale-av, but after studying and understanding a bit more, I realized that I need something more than that [dwt~] object. If everything works, I'll be publishing it here in a few weeks =)

-

andresbrocco

posted in technical issues

Hello, I'm struggling to find a well-documented object that implements a discrete wavelet transform (DWT).

I've found the [creb/dwt~] object, but the help patch really didn't help much...

The only (unofficial) document I've found that uses this external is this work: Audio-Manipulations-in-Wavelet-Domain.pdf

Does anyone have another external to share, or even good literature on this subject, so that I can understand that external or code my own?

Thanks very much!
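As a starting point for coding one's own, a Python sketch of one level of the simplest DWT, the Haar wavelet: each pair of samples becomes an approximation (scaled sum) and a detail (scaled difference), and a multi-level transform repeats the step on the approximation. This says nothing about which wavelet or conventions [creb/dwt~] uses; haar_step and haar_inverse are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

SQRT2 = math.sqrt(2.0)


def haar_step(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One Haar DWT level: (approximation, detail), each half the input length."""
    if len(x) % 2:
        raise ValueError("input length must be even")
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse(approx: Sequence[float], detail: Sequence[float]) -> List[float]:
    """Rebuild the signal from one level of coefficients."""
    out: List[float] = []
    for a, d in zip(approx, detail):
        out.extend(((a + d) / SQRT2, (a - d) / SQRT2))
    return out
```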

-

andresbrocco

posted in technical issues

@djpersonalspace I think that's a question for a Python forum, or even Stack Overflow, haha.

But yes, you've installed it for the old version (Python 2)... Try apt-get install python3-beautifulsoup4 instead of just python-beautifulsoup4.

Something like that should work.

-

andresbrocco

posted in technical issues

@JJLloyd No, your solution is also fine! I think @thisguyPDs is the one with the misconception, not you...

-

andresbrocco

posted in technical issues

It depends on your sensors (or whatever you're using).

You could read the sensor data with whichever microcontroller/microprocessor you are using, send it over the network to Pd, and receive it with a [netreceive] object.

To get values from the internet, I would write a script in another language (Python?) to scrape the data from the web page or API, and then send it to your Pd patch over the network (again receiving it with [netreceive]).
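A minimal Python sketch of the sending side, using only the standard library. It assumes Pd's FUDI text format (atoms separated by spaces, each message terminated by a semicolon) sent over UDP, which in recent Pd versions would be received by something like [netreceive -u 3000]. The port 3000, the names fudi and send_udp, and the message selector are hypothetical; symbols containing spaces, commas or semicolons are not escaped.

```python
import socket
from typing import Sequence, Union

Atom = Union[str, float, int]


def fudi(atoms: Sequence[Atom]) -> bytes:
    """Format atoms as one FUDI message: space-separated, terminated by ';'."""
    return (" ".join(str(a) for a in atoms) + ";\n").encode("ascii")


def send_udp(atoms: Sequence[Atom], host: str = "127.0.0.1", port: int = 3000) -> None:
    """Send one message as a UDP datagram to a listening [netreceive -u <port>]."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(fudi(atoms), (host, port))
```

For example, a scraper loop could call send_udp(["temperature", 21.5]), and [route temperature] after [netreceive] in the patch would then output 21.5.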

-

andresbrocco

posted in technical issues

I think you have a misconception about how signals work: the signal you send with [s~] is sent again in every DSP cycle, regardless of whether it has changed or not.

So if you want to output a bang after every DSP cycle, you can use [bang~].

But if you want to output a bang whenever the signal changes, you can use this patch I've made: signalToBang.pd
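A Python sketch of the change-detection idea, not the contents of signalToBang.pd: each sample is compared with the previous one, and the index of every block (DSP cycle) containing at least one change is reported, which is where a bang would be emitted. The name change_events is hypothetical.

```python
from typing import Iterable, List, Optional, Sequence


def change_events(blocks: Iterable[Sequence[float]]) -> List[int]:
    """Indices of the blocks in which the signal changed at least once."""
    events: List[int] = []
    prev: Optional[float] = None
    for i, block in enumerate(blocks):
        changed = False
        for s in block:
            if prev is not None and s != prev:
                changed = True
            prev = s  # carries across block boundaries
        if changed:
            events.append(i)
    return events
```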