It seems to have something to do with the in/out buffer? But it's not the same as the block size? Looking for a technical, under-the-hood explanation, or a referral to any existing documentation... Thx

-

What's the 'delay' in preferences?

-

@bklindgren Yes, it is the buffer, so it will add latency. For an internal soundcard it might need to be quite large (30 to 80 ms) so that the soundcard has time to process and probably resample to the internal sample rate of the computer, for example 48 kHz in Windows. When the computer does not have enough time to process the buffer before it is refilled, you will get audio dropouts (clicks).

With a professional sound card you might be able to reduce it to 2 to 3 ms.

David. -

Very helpful. Thanks for the reply.

So the purpose of this buffer is to accommodate the IO hardware?

And does this type of buffer have a name?

-

@bklindgren It is usually just called a buffer, and it is set as "buffer size" in samples.

Pd is unusual in calling it "Delay".

The buffer size in samples divided by the sample rate equals the delay (latency) in seconds. Of course, the 64-sample block size also adds some internal latency.
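The conversion described above can be sketched in a few lines of Python. The function names are hypothetical, chosen only for illustration; the arithmetic is just samples divided by sample rate, plus the inverse (rounded up to whole samples).

```python
import math


def buffer_latency_ms(buffer_samples: int, sample_rate: float) -> float:
    """Latency of a buffer: size in samples divided by sample rate, in ms."""
    return buffer_samples / sample_rate * 1000.0


def buffer_samples_for(latency_ms: float, sample_rate: float) -> int:
    """Inverse: smallest whole number of samples that covers latency_ms."""
    return math.ceil(latency_ms * sample_rate / 1000.0)


print(f"{buffer_latency_ms(128, 44100):.1f} ms")  # 2.9 ms
print(f"{buffer_latency_ms(64, 44100):.2f} ms")   # 1.45 ms (one 64-sample block)
print(buffer_samples_for(30, 48000), buffer_samples_for(80, 48000))  # 1440 3840
```

So a 30 to 80 ms buffer at 48 kHz corresponds to roughly 1440 to 3840 samples.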

You only need to worry about latency for live work and for live monitoring in a studio.

A latency of 2.9 milliseconds (128 samples at 44100 Hz) is the same as moving an instrument just under 1 meter away from a listener (the speed of sound in air is approximately 343 meters per second), so it is not really noticeable.
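As a quick check of the distance comparison, here is a minimal sketch that assumes a speed of sound of 343 m/s in air; the constant and function names are illustrative only.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate speed of sound in air


def equivalent_distance_m(latency_s: float) -> float:
    """Distance sound travels through air during the given latency."""
    return SPEED_OF_SOUND_M_PER_S * latency_s


latency_s = 128 / 44100  # 128 samples at 44.1 kHz
print(f"{latency_s * 1000:.1f} ms ~ {equivalent_distance_m(latency_s):.3f} m")
# prints: 2.9 ms ~ 0.996 m
```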

David. -

@bklindgren From the manual:

> The "delay" is the nominal amount of time, in milliseconds, that a sound coming into the audio input will be delayed if it is copied through Pd straight to the output.

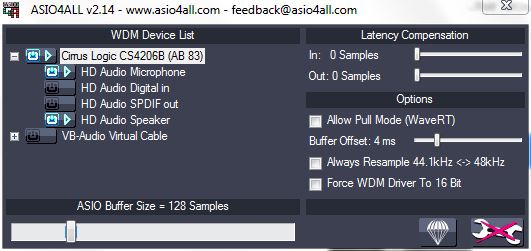

I've always assumed this includes the time it takes for the audio interface to fill Pd's input buffer, the time it takes for Pd to process a straight-through connection, the time it takes for Pd to fill the output buffer, and any OS-, driver-, or audio-framework-specific overhead. I suspect that some of these categories overlap. So I wouldn't say it's exclusively the buffer, but inasmuch as a larger buffer takes longer to process, then yes, buffer size is probably a factor. For example, with a 64-sample buffer, I'm able to set the delay much lower if I select an ASIO driver than if I select one of the Windows built-in drivers. -

Thank you both for the clarification.

I'm writing a paper in which I measure the latency of an instrument I built that synthesizes sound with Pd, and was wondering what to call this 'delay' parameter. I'm used to seeing it described as something like an 'I/O Vector Size', as in Max.

Thanks again for the help

-

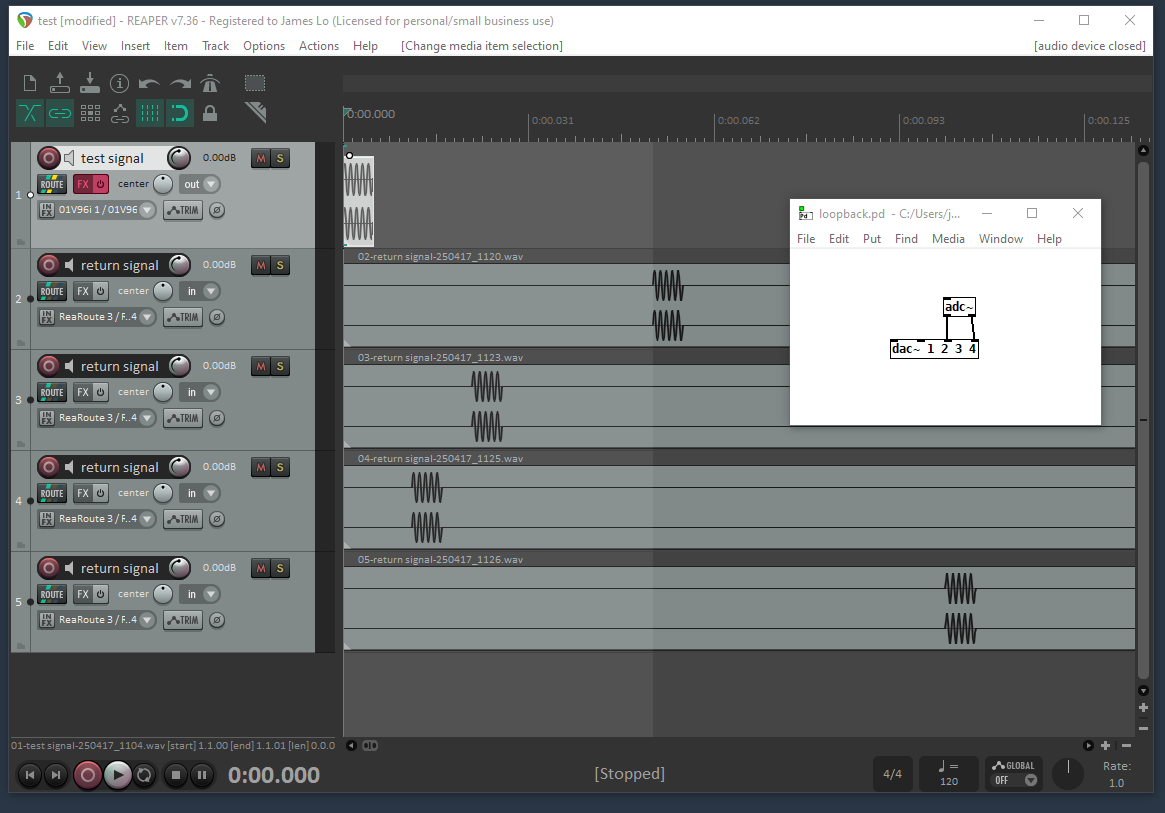

@bklindgren Maybe "expected I/O latency" or "realtime processing budget"? I say that because if the actual latency is greater, you'll hear clicks as blocks are dropped or corrupted. But the expected latency can be set much greater than the actual latency without problems. Look at the following test:

REAPER sends the test tone on track 1 out through its audio loopback driver to Pd, and Pd returns it on the next 2 channels of the loopback driver. I recorded the return signal on tracks 2 through 5 using Pd delay settings of 50, 20, 10, and 100 ms, respectively. The delays on the return tracks are 52, 21, 11, and 101 ms, respectively. -
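Subtracting each Pd delay setting from the measured return delay shows how much extra time the round trip added in this particular test. The numbers are the ones reported above; nothing else is assumed.

```python
# Pd delay settings and the return-track delays measured in REAPER (from the test above).
settings_ms = [50, 20, 10, 100]
measured_ms = [52, 21, 11, 101]

# Extra time beyond the nominal delay setting, per run.
overhead_ms = [m - s for s, m in zip(settings_ms, measured_ms)]
print(overhead_ms)  # [2, 1, 1, 1]
```

In every run the measured delay was within about 2 ms of the setting.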

The delay (msec) sets the total buffer size. It's important for round-trip latency, where Pd is looping the input to the output: the delay (msec) gets added to the block size (which, for compatibility with other patches, you should just keep at 64).
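A rough sketch of the additive model described above, assuming the only extra term is one DSP block (64 samples by default) at the given sample rate. Pd's actual scheduler may add or round differently, so treat this as an estimate, not a formula from Pd's source; the function name is hypothetical.

```python
def estimated_round_trip_ms(delay_ms: float, block_size: int = 64,
                            sample_rate: float = 44100.0) -> float:
    """Rough estimate: Pd's delay setting plus one DSP block of latency.

    Assumption: the two terms simply add; this is not taken from Pd's source.
    """
    block_ms = block_size / sample_rate * 1000.0
    return delay_ms + block_ms


print(f"{estimated_round_trip_ms(50):.2f} ms")  # 51.45 ms
```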

JUCE-based programs usually just give you a "block" of audio; Pd is more under-the-hood about the DSP. The 64-sample block is a ring buffer that the main patch runs at (which is why you have to use a subpatch with [block~] to reblock), and the delay (msec) is padding for the CPU.
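To illustrate why the delay acts as "padding for the CPU", here is a toy deadline model in Python. It is not Pd's scheduler: the function name, the time unit (one DSP block period), and the CPU costs are all hypothetical. Each block must be computed before the hardware plays it, and the delay decides how far ahead of playback that deadline sits.

```python
def count_underruns(block_costs, delay_blocks):
    """Toy model of the 'padding for the CPU' idea (not Pd's real scheduler).

    Time is measured in block periods (one DSP block, e.g. 64 samples, each).
    Block i can start being computed at time i, and the hardware plays it
    at time i + delay_blocks. Each entry in block_costs is how many block
    periods the CPU needs to compute that block. A block that is not
    finished by its play time counts as an underrun (an audible click).
    """
    finished = 0.0  # time at which the previous block finished computing
    underruns = 0
    for i, cost in enumerate(block_costs):
        start = max(finished, float(i))  # wait for the input and the previous block
        finished = start + cost
        if finished > i + delay_blocks:  # missed this block's play time
            underruns += 1
    return underruns


# The CPU is fast on average (half a block period per block),
# but one block hits a spike that takes three block periods.
costs = [0.5] * 10 + [3.0] + [0.5] * 10

for delay_blocks in (1, 2, 3, 4):
    print(delay_blocks, count_underruns(costs, delay_blocks))
# prints:
# 1 4
# 2 2
# 3 0
# 4 0
```

In this toy model, one block of padding lets a single CPU spike cause four late blocks, three blocks absorb it completely, and a fourth changes nothing: past a certain point, extra delay only adds latency.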

Normally you only run into it when you first install Pd: with the sine playing in 'Media > Test Audio and MIDI', you have to raise the amount until it stops sounding scratchy.

There's also another patch in the help browser, under 7.stuff/tools/latency, where you can loop the output of your audio back to the input and play around with lowering it. You can do this with a loopback function on a DAC, with a cable plugged from the output to the line input, or just by setting up the mic and speakers so they could cause feedback if turned up too loud. Round-trip latency tests on the internet, mostly of vintage DACs, were actually done in Pd.
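One generic way to turn a loopback recording into a round-trip latency figure is cross-correlation: slide the sent signal along the received one and find the offset where they match best. The Python sketch below uses brute-force cross-correlation on synthetic data. It is not how Pd's latency patch works internally, and the function name, burst length, and 480-sample lag are made up for the demonstration.

```python
import random


def estimate_lag(sent, received, max_lag):
    """Return the lag (in samples) at which `received` best matches `sent`.

    Brute-force cross-correlation: for each candidate lag, sum the products
    of the sent samples with the received samples shifted by that lag, and
    keep the lag with the largest sum.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(s * received[i + lag]
                    for i, s in enumerate(sent) if i + lag < len(received))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Synthetic stand-in for a loopback recording: a noise burst comes back
# 480 samples later at half the level (both numbers are made up for the demo).
sample_rate = 48000
rng = random.Random(0)
burst = [rng.uniform(-1.0, 1.0) for _ in range(256)]
true_lag = 480
received = [0.0] * true_lag + [0.5 * x for x in burst] + [0.0] * 256

lag = estimate_lag(burst, received, max_lag=1000)
print(lag, f"{lag / sample_rate * 1000:.1f} ms")  # 480 10.0 ms
```

With real recordings, `sent` and `received` would come from the output and input tracks, and a larger `max_lag` would cover longer delay settings.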

Playing around with it, I found Intel Macs can go down to 3 ms, and prosumer ASIO interfaces (like an old external Edirol DAC) can go down to the lowest setting, 2 ms. It's up to the low-level driver to give you the real round-trip latency.

Usually, as long as you can get the sine wave to play in 'Media > Test Audio and MIDI', you are good. It's really only for processing incoming audio with minimal audible latency: super-small delays go off the rails the second you really hit the CPU with polyphony, delay lines, or small block sizes.

For a while I kept it as low as it would go without sounding scratchy, but as I got into more complicated (and bloated) synth designs I just kept mine at 50: when it was having problems, more latency than that never helped, but less could cause stutters and scratchy sound.

Linux and Windows are usually set by default to 50 or 100, though I don't have a problem above 23; offhand, I think Intel Macs were set to 5 or 10. The new ARM Macs can run into a problem where the old default is too small, so they should have it set higher as well. So the real winners were old Intel Macs.

The "use callbacks" option is only for some older audio drivers on Linux.

-

Thank you @fishcrystals for the explanation and @jameslo for the test results. Very helpful!