I am trying to send real-time audio data from Python to Pd for processing and then send the processed data back to Python. I previously connected Python and Pd with the Soundflower virtual sound card, but the latency and distortion didn't work very well for me. To get a higher transfer rate and lower latency I thought of using UDP. I tried, but Pd receives each value (a 16-bit integer) as two separate integers (e.g. 520 arrives as 8 2, i.e. 2 x 256 + 8), and I send them in chunks of 1024. Converting the data back into plausible sound data in the range -1 to 1 and sending it out using [sig~] seems to be beyond Pd's processing capability: Pd seems to output only the first integer of each chunk. Is there a more direct way to capture audio through UDP? Thanks
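To illustrate the "520 arrives as 8 2" behaviour described above, here is a minimal Python sketch. It assumes the samples are signed 16-bit integers in little-endian byte order (low byte first), which matches the example but is not confirmed by the post; the function names are made up for illustration.

```python
import struct

def int16_to_bytes(value):
    """Split a signed 16-bit integer into (low_byte, high_byte), little-endian."""
    low, high = struct.pack("<h", value)
    return low, high

def bytes_to_float(low, high):
    """Rebuild the 16-bit sample from its two bytes and scale it into -1.0..1.0."""
    (value,) = struct.unpack("<h", bytes([low, high]))
    return value / 32768.0

print(int16_to_bytes(520))   # (8, 2)
print(bytes_to_float(8, 2))  # 0.015869140625
```

The receiving side has to do the equivalent of `bytes_to_float` for every pair of bytes, which is 1024 pairs per chunk in the setup described.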

-

Receive audio from Python thru UDP.

-

@Schlamborius For the Python side...... https://stackoverflow.com/questions/75430663/streaming-real-time-audio-over-udp

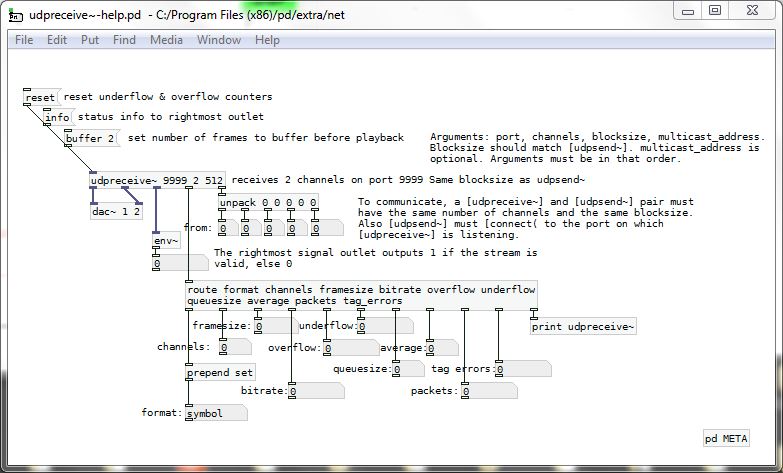

For Pd use [udpreceive~] from the "net" library.

Look for it through Help... Find externals... in the Pd menu bar.

It exists and works for a 64-bit RPI.... I don't know for other OS's.

If not then 32-bit Pd extended will be an option..... it is bundled with the download.

The lower the chunk size (probably frame size in Pd) the lower the latency.

Best to have a high buffer size though for [net/udpreceive~]..... I remember 15 is the maximum.
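As a rough illustration of the chunk size / latency relation mentioned above, the time needed just to fill one chunk can be worked out in Python. This is only a sketch: a 44.1 kHz sample rate is assumed, and network and receive-buffer delays come on top of these figures.

```python
def chunk_latency_ms(chunk_size, sample_rate=44100):
    """Time in milliseconds needed to fill one chunk of samples."""
    return 1000.0 * chunk_size / sample_rate

for size in (1024, 512, 64):
    print(size, round(chunk_latency_ms(size), 2))
# 1024 23.22
# 512 11.61
# 64 1.45
```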

David.

-

Did you try Jack?

-

@whale-av thanks! I finally managed to send audio from Python to Pd, where I can receive it. The problem, though, is that the audio I send (for testing I use some music that I feed to Python through a virtual sound card) is distorted when played out of Pd's [dac~] unless it is played at a very low volume. Any slightly higher volume very quickly turns the sound into distorted noise. This looked to me like a problem with mismatched bit depth, but I didn't find any proof of this.

What I have found out so far is that, in order to send audio from Python to Pd's [udpreceive~] object, it has to be sent in a certain format (which I worked out by receiving data from [udpsend~] in Python). The format is two alternating messages sent together: the first contains a "header", a set of 24 integers somehow defining the bit depth, channel count, chunk size etc.; the second contains one chunk of audio data. I don't really understand the meaning of the 24 integers, so what I did was send 16-bit audio with a block size of 512 through [udpsend~] to Python and copy the header to use it in the reverse direction (which is what I need). I send the data through pyaudio in 16-bit format and use the header I copied from [udpsend~]. Even the [udpreceive~] help file shows the incoming audio format as 16 bit, so I suppose it is not a bit depth problem. Do you have any idea why this is happening? Thanks.

-

@Schlamborius Pd audio out to [dac~] has to be values between +1 and -1. Outside that range there will be distortion with most soundcards (there is a very tiny margin of leeway).

As you are able to produce undistorted output I think that there is nothing wrong with the UDP stream.

You say that there is no distortion when Pd sends very low levels to the [dac~]...... maybe when there is distortion those values are outside that range, and so the only option is to increase the analogue levels post [dac~] if you need to listen at a higher volume.

You can use [print~] to see in the console the values of 64 samples as you bang its inlet.

Let us know what you find.

If you want better resolution for post processing you can send 32-bit (see [udpsend~-help]...) but feeding the dac 16-bit is good enough for humans.

Pd internal processing is 32-bit floating point.
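For checking the levels on the Python side before they reach Pd, a minimal sketch using only the standard library. It assumes little-endian signed 16-bit PCM, and the function names are made up for illustration.

```python
import struct

def pcm16_to_floats(chunk):
    """Decode little-endian signed 16-bit PCM bytes into floats in -1.0..1.0."""
    count = len(chunk) // 2
    ints = struct.unpack("<%dh" % count, chunk[: count * 2])
    return [s / 32768.0 for s in ints]

def count_clipped(samples, limit=1.0):
    """Count samples whose magnitude exceeds the range that [dac~] expects."""
    return sum(1 for s in samples if abs(s) > limit)

chunk = struct.pack("<4h", 0, 16384, -32768, 32767)
floats = pcm16_to_floats(chunk)
print(floats)                 # [0.0, 0.5, -1.0, 0.999969482421875]
print(count_clipped(floats))  # 0
```

Correctly decoded 16-bit data can never leave the -1 to 1 range this way, so values outside it would point to a decoding or scaling mismatch rather than to loud source material.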

David.

-

@Schlamborius Python 3 sockets send bytes, so text has to be encoded first (unicode, vs Python 2's ascii strings). Anything bigger than a byte (0-255) gets turned into multiple bytes, which makes it a lot less straightforward. A simple approach is to scale the audio to 0-255 in Python (8 bit) and send one sample every 1/44.1 msec (i.e. at 44.1 kHz), then in Pd scale that to -1 to 1 and put it into [vline~].
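A rough sketch of the 8-bit scaling on the Python side, using only the standard library. The host, port and function names are placeholders, and unlike the per-sample timing suggested above it sends a whole chunk per datagram, which is an assumption on my part; the receiving side in Pd is not shown.

```python
import socket

def floats_to_8bit(samples):
    """Map floats in -1.0..1.0 to bytes 0..255, clipping anything outside."""
    out = bytearray()
    for s in samples:
        s = max(-1.0, min(1.0, s))
        out.append(int(round((s + 1.0) * 127.5)))
    return bytes(out)

def send_chunk(samples, host="127.0.0.1", port=3000):
    """Send one chunk as a single UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(floats_to_8bit(samples), (host, port))

print(list(floats_to_8bit([-1.0, 0.0, 1.0])))  # [0, 128, 255]
```

In Pd the inverse would be to divide each received byte by 127.5 and subtract 1 to get back to the -1 to 1 range.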

-

@fishcrystals also there's [netreceive] for UDP, and [fudiparse] or [fromsymbol], maybe with [list trim] first, to deal with strings vs numbers in Pd vanilla now, but .. whew.. once you get something working, stick with it.

-

@whale-av yes, 16 bit should be enough. the values are not exceeding 1 even in the most extreme distortion. This should be ok.

I tested the stream by sending a stream of constants instead of a signal and found out, as @fishcrystals outlined, that [udpreceive~] correctly processes only values in the range -128 (interpreted as -1) to 127.9999 (interpreted as +1). Anything beyond that is processed modulo 128 (e.g. 1000 is processed as 104, since 1000 = 7*128 + 104). Scaling the audio to 8 bit in Python is something I can't do (my project is data-bending of images and I need higher data resolution than just 256 values). Is there anything I can do to keep my resolution (16 bit) and still receive it with [udpreceive~]? Using non-tilde objects such as [udpreceive] or [netreceive] together with list processing didn't seem possible to me (Pd just got overloaded with too much data).

-

@alexandros yes, but I haven't yet found any way to send a live stream of audio to the JACK input using the JACK API for Python, and there seems to be no documentation or working code for this type of problem online.

-

You can try the Pyo module for DSP in Python. I'm using it with Jack, and can send audio to and from it. https://belangeo.github.io/pyo/

-

@Schlamborius I am wondering why you want to do this.

There are a lot of audio modules for Python, including for streaming..... https://wiki.python.org/moin/Audio/ ...... although maybe platform dependant.

And Pd can do quite a lot.

Why do you wish to move audio between the two programs in "realtime"?

David.

-

@whale-av My goal is to write a program that can encode an image/video into sound format (data bending), send the data in real time to Pd, where I can process the "audio" consisting of the raw image data using Pd effects, and then send it back to Python, where I can convert it to live video again.

I have already managed to do this using a virtual sound card (Soundflower) as the connection between Python and Pd. This worked, although the image looks a bit scrambled and distorted when sent through this "chain" without effects, and the frame rate at which the image can be processed (and thus the video streamed) is very low, because it depends on the maximum sample rate, which is 192 kHz for Soundflower (that is roughly 2.3 FPS for an image size of 1000x800).

My former professor of programming suggested to use UDP or TCP for faster and more reliable transfer of data, so now I am trying to do this.

But let me know if this is something that can be done purely in Pd (GEM). I have no experience with GEM though, which is also why I chose Python.

-

@Schlamborius anything gem has with a ~ is similar to analog video synthesizer art. There's also a three dimensional scope.

I haven't tried video or maybe just flipping frames / animation yet. But here I am sending a block of video information out as audio for R G and B (ignoring alpha), running it through low pass and high pass filters, then turning it back in to video

In order to hear it (it sounds bad but you probably knew that) you have to put it in a [pd subpatch] with an [outlet~] because [dac~] has to live on the ground floor of a patch and [dac~] also can't be reblocked.

I really had trouble getting an image format it was happy with, ended up with uncompressed .tiff using GIMP. Also it helps to load the image first before opening the gem window.

pix_pix2sig~-helpIVEBEENSHREKED.pd

-

@Schlamborius As you are comfortable with code, and GEM has problems currently on some platforms, I suggest that you take a look at Ofelia....... https://github.com/cuinjune/Ofelia

Based on Lua, you might find it very easy to script.

The library for Pd for your platform can be found on Deken....... Pd menu... help... find externals... ofelia...

You should be able to do everything then within Pd.

But there are a lot of options you could consider and........ https://videogameaudio.com/SFCM-Apr2020/SFCM2020-PureDataAndGameAudio-LPaul.pdf .... is well worth reading.....

As you are familiar with Python you could consider embedding your Pd patch...... https://github.com/enzienaudio/hvcc

David.

P.S. I don't really know what I am talking about with programming languages (my last experience was Fortran 74), but hope my gibberish will help.

-

Since @whale-av suggested embedding Python into Pd, I'll mention Pyo again, as it includes a Pd external to load Pyo code, so you can actually load Python into a Pd patch and send its audio output straight into your Pd patch.

-

@alexandros thank you. I have Pyo but I can't find any Pd externals for it. Do you possibly have any info about that?

-

In Pyo's sources, in the embedded/puredata directory. https://github.com/belangeo/pyo/tree/master/embedded

You'll probably have to compile yourself.

-

@alexandros thanks, I tried to do it, but it seems to be obsolete now. When compiling (make command in the puredata folder), the terminal shows:

clang: error: unknown argument: '-ftree-vectorizer-verbose=2' which is an obsolete flag according to Stack Overflow.

I tried to delete '-ftree-vectorizer-verbose=2' from the Makefile, but the error persists. Is there anything I can do about this? I am afraid I am reaching the limits of my sparse programming knowledge by now, so sorry for the stupid question.

-

@Schlamborius I'm not sure. I can compile the object with a simple make, but I can't load it, as I get some error about _Py_NoneStruct, which the developer of Pyo can't reproduce, so I have abandoned this for now, and every now and then I keep thinking that I have to retry.

How did you compile? Which Pyo version do you have?

-

I have latest version of Pyo, I compiled using make command.

I'm afraid I can't make it work. But I keep thinking there MUST be a way to transfer data from Python to Pd and simultaneously back to Python without being limited by the sample rate, latency and other problems of virtual audio devices (the only way I have managed to make it work so far).

I have now tried mrpeach's [udpreceive~] and [udpsend~] again, this time with 3-channel 8-bit communication, to send each color channel separately and at the same time avoid the limitation that [udpreceive~] correctly receives only the first byte of the Python format (as @fishcrystals states, it correctly receives only values 0-255, which is the 8-bit format). But the format that [udpreceive~] requires seems totally cryptic to me when it comes to multichannel audio, and I didn't manage to reverse engineer it using [udpsend~] either. There is no documentation for this, and I couldn't even find any contact for Martin Peach himself. So I am completely lost now.

@alexandros let me know if you manage to get the Pyo Pd embedding to work. And if somebody has any ideas on how I could continue, or at least a contact for mrpeach, that would be very much appreciated, because I really feel there has to be a solution to this fairly simple question.

-

you can see the udpreceive~ source here: https://github.com/pd-externals/mrpeach/blob/main/net/udpreceive~.c

if each pixel is a sample, wouldn't the samplerate be 800k*framerate for 1000X800? that's way more than 192k...
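To put numbers on that, a quick Python sketch of the arithmetic, assuming one sample per pixel on a single channel (the function names are just for illustration):

```python
def required_sample_rate(width, height, fps, channels=1):
    """Samples per second needed if every pixel value is one sample."""
    return width * height * channels * fps

def achievable_fps(sample_rate, width, height, channels=1):
    """Frames per second that a given sample rate can carry."""
    return sample_rate / (width * height * channels)

print(required_sample_rate(1000, 800, 1))  # 800000
print(achievable_fps(192000, 1000, 800))   # 0.24
```

So under that assumption 192 kHz carries about a quarter of a 1000x800 frame per second, and three color channels on one stream would divide that by three again.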

fundamentally your biggest issue is transferring that much data across a network, even a local one (even if you pack each color channel as 8-bit values into one 24-bit int, though separating the data over multiple channels might get you closer)
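For reference, packing three 8-bit color values into one 24-bit integer could look like this in Python. It is only a sketch with made-up function names, and the red-high / blue-low ordering is an arbitrary choice.

```python
def pack_rgb(r, g, b):
    """Pack three 8-bit channel values (0-255) into one 24-bit integer."""
    return (r << 16) | (g << 8) | b

def unpack_rgb(value):
    """Recover the three 8-bit channel values from a 24-bit integer."""
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF

packed = pack_rgb(255, 128, 0)
print(packed)              # 16744448
print(unpack_rgb(packed))  # (255, 128, 0)
```

A 24-bit integer still fits exactly in a 32-bit float, but any scaling or filtering applied to the packed value would mix the three channels together, so this probably only helps for transport, not for processing.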

Anyways I'm kind of confused: how is the source 'audio'? it might make sense to think of it as sending video, then convert to/from audio in pd

There might be some GEM-based objects to do that part.. maybe you can stream to/from vlc or something

you could also consider using libpd to use pd from within python, but then externals might be tricky to get working idk