-

freakydna

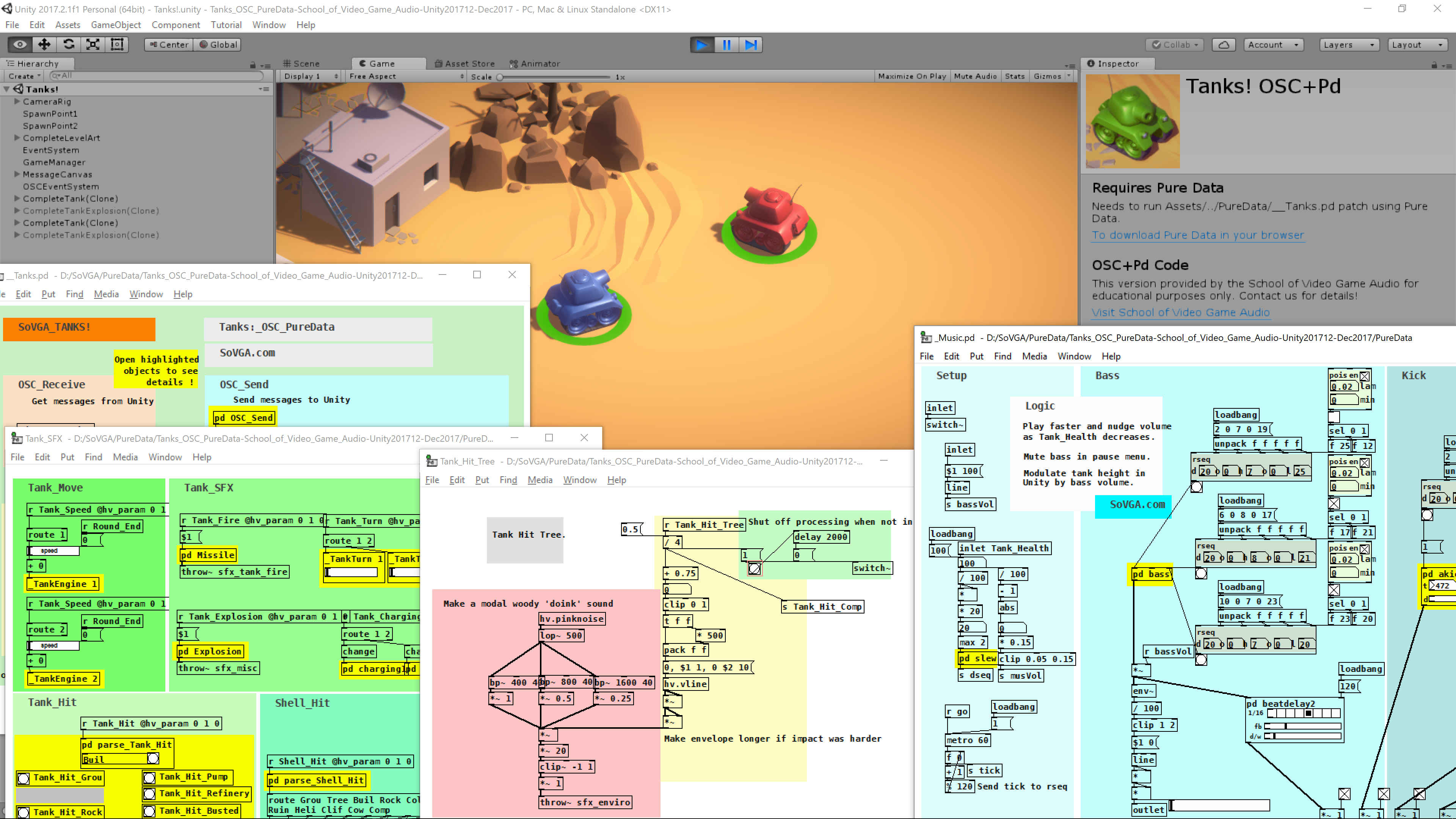

posted in patch~

The School of Video Game Audio has made a new Pd game audio project available for download that combines Open Sound Control (OSC) with Unity to trigger sounds in Pure Data. Downloading Unity isn't required, as standalone versions for Mac and PC are available, but a recent version of Pure Data is necessary. The project has over 20 synth patches plus a generative music patch, so all of the audio for the game is generated using synthesis.
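For readers new to OSC, here is a minimal Python sketch of what a game-side sender does: it encodes an OSC 1.0 message by hand and sends it over UDP. It is not taken from the project. The address `/game/jump`, port 9000 and the function names are hypothetical, and the project's actual messages may look quite different.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32, float32 and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def send(packet: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one OSC packet over UDP (host and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Example (hypothetical address): send(osc_message("/game/jump", 0.8))
```

On the Pd side, a recent Pd vanilla can usually receive such packets with `[netreceive -u -b 9000]` feeding `[oscparse]`, though the downloadable project may be wired differently.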

Quick video overview: https://twitter.com/SchoolGameAudio/status/941477419898114048

The download is available on the school's web page at: School.VideoGameAudio.com.

-Leonard

SoVGA.com

-

freakydna

posted in news

Greetings all,

I have just posted a collection of student patches for an interaction design course I was teaching at Emily Carr University of Art and Design. I hope that the patches will be useful to people playing around with Pure Data in a learning environment, installation artwork and other uses.

The link is: http://bit.ly/MI8AjN

or: http://videogameaudio.com/main.htm#patches

The patches include multi-area motion detection, video games, collision detection, facial tracking, 3D object manipulation, visualizations, beat detection, motion detection, menu systems, spectral analysis, arduino projects, movie mixing, augmented reality and more...

Cheers,

Leonard

Pure Data Interaction Design Patches

These are projects from the Emily Carr University of Art and Design ISMA 202 Interaction + Social Media Arts course for the Spring 2012 term. All projects use Pure Data Extended and most run on Mac OS X. They could likely be modified with small changes to run on other platforms as well. The focus was on education, so the patches are sometimes "works in progress" technically but should be very useful for others learning about PD and interaction design. As such, not all projects will run without issues, and they are simply provided "as is" for educational use.

NOTE: This page may move, please link from: http://www.VideoGameAudio.com for correct location.

Instructor: Leonard J. Paul

Students: Aaron, Amanda, Andy, Brooks, Emily, Erim, Eunice, Graham, Ke, Kristine, Maryam, Melody, Sarah, Scott, Sebastian and Sinae.

Aaron-Vertex_Control-PC.zip

Vertex Control is an interactive polyhedron manipulator that resembles a 16-bit cartridge video game. The viewer is presented with a video game style menu system with appropriate instructions. After selecting the basic polyhedron set (Platonic Solids) or the more complex Catalan Solids, the user manipulates the shape with a game controller joystick. To further the wonder of a retro video game experience, a ten-second burst mode can be triggered, creating a motion blur effect on the solids.

Features: 3D objects, joystick input, menu system

Amanda-Hero_Novel-MAC.zip

Hero is a visual novel game using the options and selection functions. This program does not require much setup as it is fairly simple; the focus is more on the art and story of the game as opposed to the functions.

Features: interactive novel, menu system, keyboard input

Andy-LED_Spectrum_Analyzer.zip

The technical part is the most complex part of this project. First, I need to purchase regular LEDs and resistors (in certain values), and they must be matched to each other. It also needs a little mathematical practice, since I need to plan a small circuit map for placing the LEDs, resistors, and jumpers that connect to the Arduino.

The artistic side of this project is that I would like to create an interaction between music and light (LEDs). Ideally, I would like to make a really huge wall of LEDs and let people feel a sense of clubbing. The audio input could also be changed to other styles of music to change the audience's mood.

YouTube video of project

Features: arduino, FFT analysis, spectral analysis, LED, beat detection

Brooks-Wiimote_Remixer-MAC.zip

My goal is to create a musical instrument controlled by the Wiimote. The Wii controllers are the remote-shaped controllers of the Nintendo Wii which can be connected very easily to your computer or laptop by Bluetooth. In the controller there are a couple of movement sensors and an infrared camera for tracking IR-LEDs. Upon initialization, the program will play a tune that will be visualized digitally. By using the Wiimotes, the user will be able to remix and change the tune, visualization, and overall sound of the song. This will be achieved by using gesture recognition and an audio application in Pure Data.

Features: movie playback, movie mixing, remixing, audio crossfade, movie crossfade, gesture detection, wiimote, audio, video

Emily-Home_Body-MAC.zip

For this assignment, I layered 4 videos on top of each other and used the alpha to blend the layers. The layers are controlled by audio input levels, and there are equations that govern the alpha blend based on the volume coming into the computer. When the volume is low, there is a video of a house demolition, with a webcam video in the background. I used a Chroma Key patch to key the webcam video. On top, there is a video of a human cadaver dissection with a computer-generated animation of the immune system, in which the immune system is compared to an army. As the volume going into the microphone goes up, the cadaver/immune system overlays the webcam, all within the dark spots of a house being demolished.
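The description does not give the actual equations, so the following Python sketch only illustrates the general idea of driving an alpha blend from an audio input level. The `floor` and `ceiling` thresholds and both function names are assumptions chosen for illustration, not values from the patch.

```python
import math


def rms(samples):
    """Root-mean-square level of one block of audio samples (range -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def level_to_alpha(level, floor=0.05, ceiling=0.5):
    """Map an input level to an alpha value between 0 and 1.

    Levels at or below `floor` give a fully transparent overlay (0.0),
    levels at or above `ceiling` give a fully opaque overlay (1.0),
    and levels in between are interpolated linearly.
    """
    t = (level - floor) / (ceiling - floor)
    return min(1.0, max(0.0, t))


# A quiet block leaves the overlay hidden; a loud one brings it in.
quiet = [0.01, -0.01, 0.01, -0.01]
loud = [0.5, -0.5, 0.5, -0.5]
alpha_quiet = level_to_alpha(rms(quiet))
alpha_loud = level_to_alpha(rms(loud))
```

A real patch would probably also smooth the level over time so the overlay does not flicker with every transient.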

Features: audio input, movie layering, movie alpha mask, movie mixing, movie playback

Erim-Continuum-MAC.zip

I used Pure Data with a webcam and FaceOSC software for face recognition, and as a switch that affects multiple conditions such as the sounds, video and model. The patch consists of two phases and includes two background videos, a sound mixer, and a 3D model. The first phase is the inactive version of the patch, which means it doesn't get any feedback from the webcam. This part consists of the blacked-out shape of the male 3D model, a noisy video loop of some clouds in the background and a white noise sound. When the patch starts to get feedback, the second phase is activated: the background video and sound change and the model starts to move depending on the person in front of the camera. FaceOSC recognizes a face through the webcam feed and sends numeric values for the face's position and angle to Pure Data. I used these values to give real-time motion to the 3D model so that it imitates the person's facial position and angle.

Features: facial recognition, gesture recognition, FaceOSC, movie playback, 3d model, audio processing, FFT analysis

Eunice-Motion_Noise-MAC.zip

The project I did uses motion to control different pitches, and I built it using the motion detection patch from the example list as a base. I made it black and white to give it more visual contrast. The idea was to show how motion can trigger sound and to explore the "stillness" of sound as well. If there is movement, even just a little, there will be numerous pitches depending on the motion detector, so it is hard to get a monotone since it is hard to get everyone to stay still.

Features: motion detection, video processing, interactive audio synthesis

Graham-Lost_in_Space-MAC.zip

The technical side of this patch is based around a location trigger that uses motion disturbances within the webcam's focal spectrum to send bangs to the different camera directions, providing for an 'unlimited' number of perspectives of the graphic environment. These bangs are routed through a random object before being directed at 1 of 6 possible camera directions. The bangs are also coupled with a delay bang to disengage the camera movement after an interval of between 1 and 3 seconds.
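The routing described above can be sketched outside Pd as follows. This is only an illustration of the logic, not a translation of the patch. The direction names and the function name are hypothetical, since the description only says there are 6 possible camera directions.

```python
import random

# Hypothetical names for the 6 camera directions mentioned in the description.
DIRECTIONS = ("left", "right", "up", "down", "forward", "back")


def on_motion_bang(rng):
    """Handle one motion trigger.

    Picks 1 of 6 camera directions at random and a delay of between
    1 and 3 seconds after which the camera movement should be disengaged.
    """
    direction = rng.choice(DIRECTIONS)
    stop_after_seconds = rng.uniform(1.0, 3.0)
    return direction, stop_after_seconds


rng = random.Random()
direction, stop_after_seconds = on_motion_bang(rng)
```

In Pd terms, the random choice corresponds roughly to a `[random 6]` feeding a `[select]`, and the stop time to a `[delay]` with a randomised interval.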

The project was conceived and presented as a performative or "feedback" style of interaction by directing the webcam at the patch's projected image, thereby triggering bangs based on its own graphics. Alternatively, the patch can be viewed on personal computers; in this case some movement on behalf of the viewer is required to set the camera in motion.

Features: motion detection, 3d objects, real-time audio synthesis, movie texture mapping, camera motion

Ke_Hu-Dancing_Shapes-MAC.zip

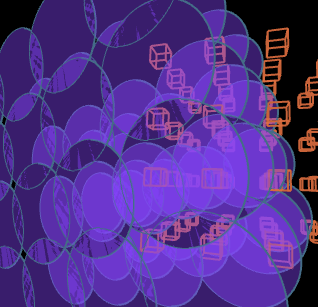

In the final project, I explore the relationship between audio and visual images, with three-dimensional geometric images moving in two-dimensional space. Together these eventually form the audio visualization.

For the technical aspect, the sound and music I chose are random dance songs from the radio, so I used "connect url" to link to the stream's URL, which depends on Internet access. For the visual images, I use cubes and circles as objects. The "repeat" and "rotateXYZ" objects were used to organize the cubes and circles on the screen. The interaction between images and music includes the scale, the color and the rate of image shaking.

In the early days of interactive images, many artists explored the relationship between 2D space and 3D space, such as John Whitney and John Cage. My goal is to achieve a visual composition and transformation that keeps up with controlled randomness, which means that, on the one hand, the images are randomly positioned in two-dimensional space and, on the other hand, they are framed and controlled within a certain range. The audience can play this interactive audio visualization with the mouse.

Features: audio mp3 internet input stream, beat detection, 3d objects, vj colour effects, audio visualizer

Kristine-Beauty_Bounced-MAC.zip

For my final project, I want to use the physics 2d ball script/code. The motion of sweeping away objects will be the centre of this project. Sound will also be distorted with the movement of the ball. Videos will be mixed when the ball hits the walls of the window. The idea behind this piece is to further distort the image of beauty that is shown through media in our present day.

Features: physics simulation, spring physics, movie playback, collision detection, movie crossfading, real-time audio scratching effect

Maryam-Dancing_Puppet-MAC.zip

I created an interactive game called the Dancing Puppet game. In this game the viewer has to make a sheep figure on the page dance to the beat of the music by placing their hand on one of the 4 squares that get highlighted at the top of the page. The figure will not dance unless the right places are pointed at, but the sheep doesn't necessarily point to the same square as the viewer. In terms of artistic intentions, I'm trying to illustrate ideas of control through right and wrong and how the viewer will only be able to control the figure if they're right. At the same time, although the viewer thinks he/she is controlling the sheep, the sheep reacts differently to what is expected and points in a different direction than the viewer. This may hint at the plans that we make for our lives, thinking we are doing what is right and wrong (if there is one), with the result being something we never expect. It is also a hint at how we may all be very sheep-like, and puppets to destiny. On the technical side I started with a motion cell patch. The webcam is used as the main interaction tool that detects which square the viewer decides to place their hand on. The squares get highlighted to the beat of the song.

Features: video game, regional motion detection, multi-texture object

Melody-Quantum-MAC.zip

Pure Data and the zip file should be enough to run this patch. When working on this patch I had a few goals in mind. I wanted to create something that related both to science and to art, and to work on generating a 3D environment. I decided to create an artist's interpretation of quantum particles popping in and out of existence while they bend the space around them.

Quantum shows particles which you can alter through the patch. They respond to music input and, when forced upwards, they bend a space grid below them. The background will also become brighter based on the user's physical movement (if they have a webcam in their computer).

The technical component for this piece was combining various patches, modifying them and asking them to interact with each other. There is a wave patch, a beat levels patch, a sphere patch, and a string patch all working to create this environment.

Features: real-time mp3 input audio stream, internet radio, 3d object, dynamic 3d objects, texture mapping, FFT analysis

Sarah-White_Space-MAC.zip

The technical part of my project was just to have the sound alter the spinning rotations of the spheres and cubes. The sound can be manipulated to the participant's own liking. The other aspects (like the video) are there for aesthetic purposes. My artistic goal with this piece was to create an immersive virtual space that one could interact with.

Features: movie texture mapping, real-time audio synthesis

Scott-Face_Swap-MAC.zip

For my face swap patch, I borrowed some ideas from last year's "dog head" and "asteroid tracker" projects as well as getting the idea for large heads from playing "NBA Jam." Being that interactivity was the most important aspect of the project, I wanted to create something that was fun and could be used after the semester was over. Using the color-detection code from "asteroid tracker" I wanted the different goofy celebrity faces to overlay on top of the actual user's to make for some funny combinations. The patch tracks the user's facial position by finding the orange glasses that the user is wearing and increasing or decreasing the size of the overlay face image based on how close the sunglasses are to the webcam. It could be fun in a party or gallery setting where as soon as someone walks in front of the webcam while wearing the glasses, they have this celebrity face slapped on top of theirs.

Features: motion tracking, augmented reality

Sebastian-Music_Controller-MAC.zip

My patch enabled me to use a wireless USB game controller as a MIDI controller within Ableton Live. My piece didn't convey the message that I wanted to achieve artistically because it lacked the video aspect. My patch used a Logitech Rumblepad2 controller (selected in Pure Data) and sent out MIDI via the LoopBe1 internal MIDI driver into Ableton Live, where the MIDI paths were connected to effects and samples within Live.

Features: game controller input

Sinae-Bluescreen_Ocean-PC.zip

My patch was for a part of my performance, whose content is based on the ocean and computer viruses. I used ocean sound/music to create an ocean environment, and a blue screen graphic that recalls the Blue Screen of Death that appears on a PC when it runs into a problem.

Features: motion detection, real-time webcam video effects, interactive audio synthesis

-

freakydna

posted in news

Greetings all,

I have just posted a collection of student patches for an interaction design course I was teaching at Emily Carr University of Art and Design. I hope that the patches will be useful to people playing around with Pure Data in a learning environment, installation artwork and other uses.

The link is: http://bit.ly/8OtDAq

or: http://www.sfu.ca/~leonardp/VideoGameAudio/main.htm#patches

The patches include multi-area motion detection, colour tracking, live audio looping, live video looping, collision detection, real-time video effects, real-time audio effects, 3D object manipulation and more...

Cheers,

Leonard

Pure Data Interaction Design Patches

These are projects from the Emily Carr University of Art and Design DIVA 202 Interaction Design course for Spring 2010 term. All projects use Pure Data Extended and run on Mac OS X. They could likely be modified with small changes to run on other platforms as well. The focus was on education so the patches are sometimes "works in progress" technically but should be quite useful for others learning about PD and interaction design.

NOTE: This page may move, please link from: http://www.VideoGameAudio.com for correct location.

Instructor: Leonard J. Paul

Students: Ben, Christine, Collin, Euginia, Gabriel K, Gabriel P, Gokce, Huan, Jing, Katy, Nasrin, Quinton, Tony and Sandy

GabrielK-AsteroidTracker - An entire game based on motion tracking. This is a simple arcade-style game in which the user must navigate the spaceship through a field of oncoming asteroids. The user controls the spaceship by moving a specifically coloured object in front of the camera.

Features: Motion tracking, collision detection, texture mapping, real-time music synthesis, game logic

GabrielP-DogHead - Maps your face from the webcam onto different dogs' bodies in real time with an interactive audio loop jammer. Fun!

Features: Colour tracking, audio loop jammer, real-time webcam texture mapping

Euginia-DanceMix - Live audio loop playback of four separate channels. Loop selection is random for the first two channels and sequenced for the last two channels. Slow volume muting of channels allows for crossfading. Tempo-based video crossfading.

Features: Four channel live loop jammer (extended from Hardoff's ma4u patch), beat-based video cross-cutting

Huan-CarDance - Rotates a 3D object based on the audio output level so that it looks like it's dancing to the music.

Features: 3D object display, 3d line synthesis, live audio looper

Ben-VideoGameWiiMix - Randomly remixes classic video game footage and music together. Uses the wiimote to trigger new video via DarwiinRemote and OSC messages.

Features: Wiimote control, OSC, tempo-based video crossmixing, music loop remixing and effects

Christine-eMotionAudio - Mixes together video with recorded sounds and music depending on the amount of motion in the webcam. Intensity level of music increases and speed of video playback increases with more motion.

Features: Adaptive music branching, motion blur, blob size motion detection, video mixing

Collin-LouderCars - Videos of cars respond to audio input level.

Features: Video switching, audio input level detection.

Gokce-AVmixer - Live remixing of video and audio loops.

Features: video remixing, live audio looper

Jing-LadyGaga-ing - Remixes video from Lady Gaga's videos with video effects and music effects.

Features: Video warping, video stuttering, live audio looper, audio effects

KatyC_Bunnies - Triggers video and audio using multi-area motion detection. There are three areas on each side to control the video and audio loop selections. Video and audio loops are loaded from directories.
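Several of these projects rely on multi-area motion detection. The patches themselves do this with Pd video objects, but the underlying idea can be sketched in a few lines of Python using simple frame differencing. This is an assumed approach for illustration only: the region names, the threshold and the frame format (rows of grayscale values from 0 to 255) are hypothetical.

```python
def region_motion(prev_frame, curr_frame, region):
    """Mean absolute pixel difference inside one rectangular region.

    Frames are lists of rows of grayscale values (0-255).
    `region` is (x0, y0, x1, y1) with x1 and y1 exclusive.
    """
    x0, y0, x1, y1 = region
    total = 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            total += abs(curr_frame[y][x] - prev_frame[y][x])
    return total / ((x1 - x0) * (y1 - y0))


def active_regions(prev_frame, curr_frame, regions, threshold=10.0):
    """Names of the regions whose motion exceeds the threshold."""
    return [
        name
        for name, region in regions.items()
        if region_motion(prev_frame, curr_frame, region) > threshold
    ]


# Two 4x4 frames: something moved in the left half only.
previous = [[0, 0, 0, 0] for _ in range(4)]
current = [[100, 100, 0, 0] for _ in range(4)]
regions = {"left": (0, 0, 2, 4), "right": (2, 0, 4, 4)}
triggered = active_regions(previous, current, regions)
```

Each triggered region name could then select a video or audio loop, much as the areas on each side of the image do in the patch described above.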

Features: Multi-area motion detection, audio loop directory loader, video loop directory loader

Nasrin-AnimationMixer - Hand animation videos are superimposed over the webcam image and chosen by multi-area motion sensing. Audio loop playback is randomly chosen with each new video.

Features: Multi-area motion sensing, audio loop directory loader

Quintons-AmericaRedux - Videos are remixed in response to live audio loop playback. Some audio effects are mirrored with corresponding video effects.

Features: Real-time video effects, live audio looper

Tony-MusicGame - A music game where the player needs to find how to piece together the music segments triggered by multi-area motion detection on a webcam.

Features: Multi-area motion detection, audio loop directory loader

Sandy-Exerciser - An exercise game where you move to the motions of the video above the webcam video. Stutter effects on video and live audio looper.

Features: Video stutter effect, real-time webcam video effects

-

freakydna

posted in news

Hi all,

I've uploaded a new sampled sound granulation patch to my website at:

http://www.VideoGameAudio.com/

under the 'patches' section:

http://videogameaudio.com/main.htm#patches

It granulates a loaded sample and allows the grains to be distributed in 5.1 surround. It should be fairly easy for you to use the surround or granulation code in your own work. Let me know if it is useful to you and perhaps give credit when appropriate.
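For anyone who wants the general idea before opening the patch, here is a rough Python sketch of the two steps: cutting a windowed grain out of a sample and giving it per-speaker gains. It is not a port of the Pd code. The speaker angles, the Hann window, the equal-power panning between the two nearest speakers and all names are assumptions, and the LFE channel is left out.

```python
import math
import random

# Assumed speaker azimuths in degrees, clockwise from front centre (no LFE).
SPEAKER_ANGLES = {"C": 0.0, "R": 30.0, "Rs": 110.0, "Ls": 250.0, "L": 330.0}


def hann_grain(sample, start, length):
    """Cut one grain out of the sample and fade it in and out with a Hann window."""
    grain = sample[start:start + length]
    n = len(grain)
    if n < 2:
        return list(grain)
    return [
        s * 0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1)))
        for i, s in enumerate(grain)
    ]


def surround_gains(angle):
    """Equal-power pan between the two speakers on either side of `angle`."""
    angle %= 360.0
    ordered = sorted(SPEAKER_ANGLES.items(), key=lambda item: item[1])
    gains = {name: 0.0 for name in SPEAKER_ANGLES}
    for i, (name_a, a) in enumerate(ordered):
        name_b, b = ordered[(i + 1) % len(ordered)]
        span = (b - a) % 360.0       # arc between the two neighbouring speakers
        offset = (angle - a) % 360.0  # how far the source sits past speaker a
        if offset <= span:
            t = offset / span
            gains[name_a] = math.cos(t * math.pi / 2.0)
            gains[name_b] = math.sin(t * math.pi / 2.0)
            break
    return gains


def random_grain(sample, length, rng):
    """Pick a random grain position and a random direction for it."""
    start = rng.randrange(0, max(1, len(sample) - length + 1))
    angle = rng.uniform(0.0, 360.0)
    return hann_grain(sample, start, length), surround_gains(angle)
```

Each output channel would then receive the grain multiplied by its gain, with many overlapping grains summed to form the final texture.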

I presented it as part of a demonstration at the GameSoundCon in San Francisco in November 2009 and had several people ask for the code, so here it is.

Cheers,

Leonard J. Paul / VideoGameAudio.com