I recently noticed that on Windows, the USB audio interfaces I use have adjustable buffer sizes analogous to PD's block size (I don't see a corresponding control in OS X, though). The default value ranges from 64 samples for the Yamaha/Steinberg USB driver to 512 for the MOTU Audio Console. I wrote a PD patch that does nothing except pass input to output, and verified that smaller audio interface buffer sizes on the MOTU result in proportionally lower input-to-output latency. However, that latency seems to include some additional overhead (~7 ms) not accounted for by the PD delay, PD block size, and audio interface buffer size. What accounts for the additional overhead? Is there any interaction between PD block size and audio interface buffer size? Is there a better way to choose the optimal audio interface buffer size than trial and error? Does that size depend on the specifics of your PD patch?
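The latency budget in the question can be written out as plain arithmetic. This is a minimal Python sketch assuming the listed components simply add up; the function names, the 48 kHz sample rate, and the example numbers are hypothetical, and the sketch does not try to explain the extra overhead, only to compute it as a residual.

```python
# Naive input-to-output latency budget: a sketch that ASSUMES Pd's "delay",
# one 64-sample Pd block, and the interface buffer(s) simply add up.
# All names and numbers here are hypothetical illustrations.

PD_BLOCK = 64  # Pd's global block size in samples


def samples_to_ms(samples: int, sr: float) -> float:
    """Duration of `samples` at sample rate `sr`, in milliseconds."""
    return 1000.0 * samples / sr


def naive_latency_ms(pd_delay_ms: float, hw_buffer: int, sr: float,
                     hw_buffers_counted: int = 1) -> float:
    """Predicted latency from the components named in the question.

    `hw_buffers_counted` is an assumption: the question lists the interface
    buffer once, but a round trip may pass through both an input and an
    output buffer.
    """
    return pd_delay_ms + samples_to_ms(PD_BLOCK + hw_buffers_counted * hw_buffer, sr)


def unexplained_ms(measured_ms: float, pd_delay_ms: float, hw_buffer: int,
                   sr: float, hw_buffers_counted: int = 1) -> float:
    """Whatever a measurement shows beyond the naive prediction."""
    return measured_ms - naive_latency_ms(pd_delay_ms, hw_buffer, sr, hw_buffers_counted)


# Hypothetical example: 5 ms Pd delay, 512-sample buffer at 48 kHz.
print(naive_latency_ms(5.0, 512, 48000.0))      # about 17.0 ms
print(unexplained_ms(24.0, 5.0, 512, 48000.0))  # about 7.0 ms
```

Computing the residual for several buffer sizes shows whether the leftover really stays constant, as the measurements above suggest.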

-

PD block size vs audio interface buffer size

-

In general, the "blocksize" in the audio settings is really the hardware buffer size, not Pd's global block size (which is always 64 samples).

Modern ASIO drivers are multiclient, which means that the device can be used by more than one app at the same time. When you set the buffer size in your device app (e.g. Focusrite MixControl), this sets the internal buffer size and consequently the minimum latency possible for all clients. Usually you want to set the internal buffer size as low as possible without getting audio glitches.

Individual clients (e.g. a Pd instance) can request a buffer size from the device. This can't be smaller than the internal buffer size mentioned above, but it can be larger (if you need extra latency). Most DAWs control the latency via the buffer size, while Pd has its own buffering mechanism ("delay").
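The request rule described above amounts to a clamp. This is an idealized Python model, not any real driver's API: `effective_client_buffer` and the sample values are hypothetical, and a real driver might round or reject a request rather than clamp it.

```python
# Idealized multiclient buffer negotiation (hypothetical, not a real ASIO API):
# the device app fixes an internal buffer size, and each client may request a
# larger buffer but never a smaller one.

def effective_client_buffer(internal_buffer: int, requested: int) -> int:
    """Buffer size a client ends up with under the rule described above."""
    return max(internal_buffer, requested)


# Internal buffer set to 64 samples in the device app (hypothetical value).
for request in (32, 64, 256):
    print(request, "->", effective_client_buffer(64, request))
# prints: 32 -> 64, 64 -> 64, 256 -> 256 (one per line)
```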

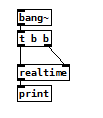

Note that for Pd, the hardware buffer size affects the overall timing resolution (when DSP is on). Pd calculates several blocks of 64 samples as fast as possible until it has enough samples to send to the device. If the hardware buffer size is 256, Pd calculates 4 blocks in a row (4 * 64 = 256) and then waits until the device asks for another 256 samples. This causes jitter! (Try [bang~] with [realtime] and [print] to see it for yourself.) The lower the hardware buffer size, the less jitter you get, so it's generally better to keep "blocksize" as low as possible and increase "delay" instead if you need more latency.
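The burst-then-wait behavior described above can be simulated. This is a minimal Python sketch of an idealized model, not Pd's scheduler code: it assumes a constant CPU cost per block (`compute_ms`, a made-up value) and a device that requests buffers at perfectly regular intervals. It computes when each 64-sample block finishes (roughly when [bang~] would fire) and the gaps between them (what [realtime] would measure).

```python
# Idealized model (an assumption, not Pd's actual scheduler): the device asks
# for `hw_buffer` samples at regular intervals, and Pd answers each request by
# computing hw_buffer // 64 blocks back to back, each costing `compute_ms`.

PD_BLOCK = 64  # Pd's global block size in samples


def block_times_ms(hw_buffer: int, sr: float, compute_ms: float,
                   n_requests: int) -> list[float]:
    """Wall-clock time (ms) at which each 64-sample block finishes computing."""
    if hw_buffer % PD_BLOCK != 0:
        raise ValueError("this model needs a hardware buffer that is a multiple of 64")
    blocks_per_request = hw_buffer // PD_BLOCK
    request_period_ms = 1000.0 * hw_buffer / sr
    if blocks_per_request * compute_ms > request_period_ms:
        raise ValueError("CPU too slow for this buffer: the model would drop out")
    times = []
    for r in range(n_requests):
        start = r * request_period_ms  # device asks for the next buffer
        for j in range(blocks_per_request):
            times.append(start + (j + 1) * compute_ms)  # blocks computed back to back
    return times


def intervals_ms(times: list[float]) -> list[float]:
    """Gaps between consecutive blocks, like [realtime] between [bang~] outputs."""
    return [b - a for a, b in zip(times, times[1:])]


if __name__ == "__main__":
    sr = 44100.0
    for hw in (64, 256, 1024):
        gaps = intervals_ms(block_times_ms(hw, sr, compute_ms=0.05, n_requests=4))
        print(f"hw buffer {hw:4d}: min gap {min(gaps):.3f} ms, max gap {max(gaps):.3f} ms")
```

In this model, a 64-sample hardware buffer gives identical gaps equal to the nominal block period (about 1.45 ms at 44.1 kHz). With 256, the gaps alternate between `compute_ms` and almost a full buffer period, even though the average rate of one block per 64 samples is unchanged.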

BTW, with old single-client ASIO drivers, "blocksize" directly sets the hardware buffer size of the device. This is still the case with e.g. ASIO4ALL.

-

Thanks. I verified that the blocksize chosen under Audio Settings doesn't affect PD's global blocksize, which is surprising. But I'm trying to understand what you mean about jitter. Is this the test you're suggesting?

If so, I get similarly jittery results no matter what Audio Settings block size I choose. But more to the point, isn't this "jitter" irrelevant? It's just a function of how fast the computer processes each block, what else it has to do concurrently, and how frequently it has to fill the audio interface's output buffer. The fact that each block is processed at irregular intervals isn't necessarily reflected at the audio output, right?